Artificial intelligence would detect hate speech and offensive words on social media and then encourage users to reconsider their posts as part of a new system that gives two Daniel High School students a chance to work alongside Clemson University researchers.

The system, HateDefender, is in its early stages, but the idea is to prevent the spread of hate speech on social media without resorting to censorship.

Researchers are wrapping up the first version of their system as the run-up to the U.S. presidential election inspires a fresh wave of venomous attacks. About four in 10 Americans have personally experienced online harassment and 63% consider it a major problem, according to a 2017 report from the Pew Research Center.

Once HateDefender is up and running, a social-media user who posts objectionable material would receive a warning that points out which words were potentially insulting. It would be up to users to decide whether to edit their comments.

“Too much censorship is not generally acceptable by users,” said Nishant Vishwamitra, a Ph.D. student who is working on the project. “Sometimes we need to explain the problem and at the same time give social networks the freedom to continue operating.”

If adopted, HateDefender would be built into social media sites. Users wouldn’t have to download an additional app or plug-in for it to function.

In the team's tests, HateDefender correctly detected hate speech 90.82% of the time and offensive language 89.1% of the time.

The team has developed a new way of detecting hate speech and offensive language based on pronunciation. Researchers found that much of the speech they were targeting contained misspellings and other lexical errors that created “noise,” making it harder for a computer to detect.
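The article does not spell out how HateDefender's pronunciation-based method works. As a rough illustration of the general idea only, the sketch below uses Soundex, a classic phonetic code swapped in here and not necessarily the team's approach, to map a deliberate misspelling such as "stoopid" to the same key as "stupid," then lists the matching words the way a warning might point them out. The `soundex` and `flag_words` functions and the two-word lexicon are hypothetical.

```python
import re

# Standard American Soundex digit groups; vowels, y, h and w get no digit.
CODES = {}
for letters, digit in (("bfpv", "1"), ("cgjkqsxz", "2"), ("dt", "3"),
                       ("l", "4"), ("mn", "5"), ("r", "6")):
    for ch in letters:
        CODES[ch] = digit


def soundex(word: str) -> str:
    """Return the 4-character Soundex key for a word (e.g. 'Robert' -> 'R163')."""
    letters = [c for c in word.lower() if c.isalpha()]
    if not letters:
        return ""
    result = letters[0].upper()          # the first letter is kept as-is
    prev = CODES.get(letters[0], "")     # its code still blocks an identical next code
    for ch in letters[1:]:
        if ch in "hw":
            continue                     # h and w do not separate identical codes
        code = CODES.get(ch, "")         # vowels (and y) map to "" and reset prev
        if code and code != prev:
            result += code
        prev = code
        if len(result) == 4:
            break
    return (result + "000")[:4]          # pad short keys with zeros


def flag_words(text: str, lexicon: list[str]) -> list[str]:
    """Hypothetical helper: return tokens that sound like any lexicon entry."""
    targets = {soundex(w) for w in lexicon}
    return [tok for tok in re.findall(r"[A-Za-z]+", text) if soundex(tok) in targets]


if __name__ == "__main__":
    flagged = flag_words("You are so stoopid, what a lozer", ["stupid", "loser"])
    print("Potentially insulting words:", flagged)
```

Running it prints `Potentially insulting words: ['stoopid', 'lozer']`. A bare phonetic lookup also produces false positives (for example, "stepped" shares the key S313 with "stupid"), which is one reason a practical detector would likely combine such a normalization step with a trained classifier rather than rely on it alone.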

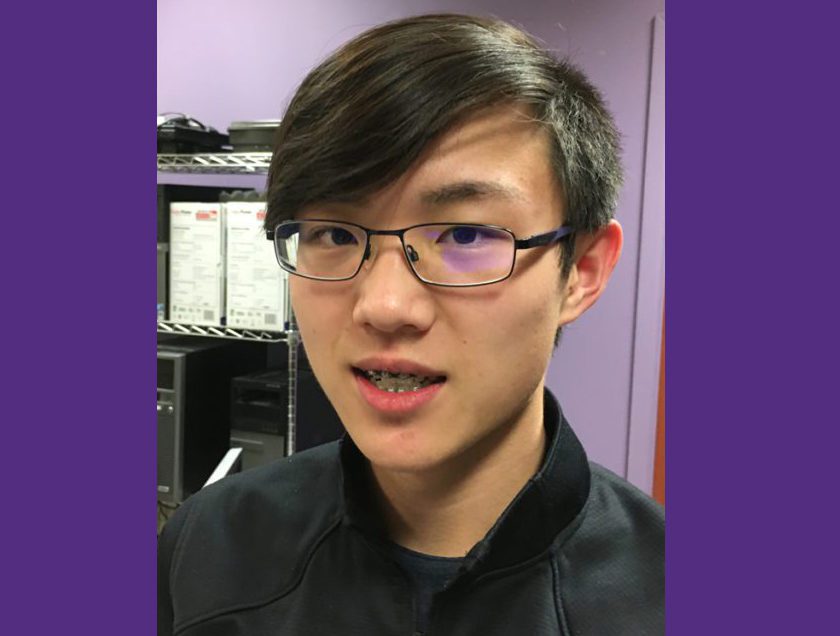

What also makes HateDefender stand out is the educational opportunity it presents to Daniel High students, including Roger Hu, who is working alongside professors and graduate students to create the system.

Hu, a junior, said he helped write papers, made graphs from data sets, labeled hate words and helped write code. He plans to draw on the unique experience throughout his life.

“When I go to college, I want to major in computer science,” he said. “Because I’m interested in AI, I want to learn more advanced techniques in such an emerging area.”

The project is overseen by Professor Feng Luo from Clemson’s School of Computing.

The idea to focus on hate speech came from Matthew Costello, an assistant professor of sociology at Clemson. He approached Luo after conducting research into the online habits that lead people to come into contact with hate and become targets of hate. He also researched how people respond to hate.

“I had the idea that it would be great to auto-detect hate speech and then potentially block it, if people were interested in trying to block it,” Costello said. “Dr. Luo has the skills to do that.”

Amy Apon, the C. Tycho Howle Director of the School of Computing, said the project underscores the growing role of computing in a wide range of research, including in the social sciences.

“Working across disciplines and departments is becoming increasingly important,” she said. “Artificial intelligence and other advanced techniques are opening new doors for scientific discovery and helping create new products and services. HateDefender is a great example of a team effort that addresses a real-world problem while creating a unique educational experience.”

HateDefender and the method for detecting hate speech are detailed in two papers the team plans to present at an Association for Computing Machinery workshop and conference. Co-authors on both papers are Hu, Dorris, Vishwamitra, Luo, and Costello.

“I think it’s a huge experience for high school students,” Luo said. “They are learning what research is about and how to write a meaningful paper for publication. It helps establish an interest in research and gives them confidence that they can do it.”

The papers are titled “Towards Automatic Detection and Explanation of Hate Speech and Offensive Language” and “On the Impact of Word Representation in Hate Speech and Offensive Language Detection and Explanation.”

HateDefender is the latest artificial intelligence research to come from Luo, who invites two or three high school students to work as interns each summer.

Luo’s team has applied some of the same artificial intelligence techniques to create BullyDefender, an app designed to counteract visual cyberbullying.
