Nothing pairs better with a dystopian future than a cold, unfeeling artificial intelligence unbound by ethical constraints. But it’s easy to forget that the boogeymen of “2001: A Space Odyssey” and “The Terminator” are really just the products of bad coding by humans.

A Clemson University College of Education researcher is exploring how to teach upper elementary school children that unethical decisions and design can lead to AI applications that exclude people or even cause harm. Killer robots are an extreme example, and one unlikely to crop up in the classroom.

Golnaz Arastoopour Irgens’ CAREER project, funded by the National Science Foundation, will use $1.4 million to co-develop a curriculum with teachers for grades 3-5 that helps students understand how AI works and how to think critically about it.

The curriculum that Arastoopour Irgens envisions makes these concepts concrete by giving students a decidedly sci-fi task: teachers send their students to the future and ask them to report back on the flawed AI systems they find. She said students thrive on stories in the classroom, so letting them use their imagination in a fictional future will help bring present-day examples to life.

“The hope is that students become a little more critical and questioning of the systems that are already around them,” Arastoopour Irgens said. “The earlier students understand these concepts, the more likely it is that they will spot these issues in real time and even build their own machine learning applications in a way that is inclusive of all people.”

Arastoopour Irgens points out that examples of harm caused by AI don’t require a time machine; they surround students today and range from the mundane to the life-ruining. A search engine that autocompletes queries in ways that appeal to only one group can be annoying or less useful, but racial biases in health care exacerbated by AI, or facial recognition software that leads to false arrest and imprisonment, can ruin or end lives.

Arastoopour Irgens said that people of all ages tend to take AI at face value without considering the humans who designed it. The designers’ biases, intentional or not, are baked into the way an AI system or machine works. This becomes a problem when the designers are a homogeneous group, and the programming industry still skews white and male.

Arastoopour Irgens said that these issues can and should be approached from the top down, by changing laws and putting a diverse group of leaders in power who can change things, but her own approach works from the bottom up.

“I want this work to get kids to question these technologies so that when they’re old enough to enact change, they might choose to design future AI technologies or just understand them better in the workforce where AI use will only increase,” Arastoopour Irgens said. “Approaching the work from this angle is a real dream project for me.”

The National Science Foundation’s Faculty Early Career Development Program grant, often called the NSF CAREER award, supports early-career faculty who have the potential to serve as academic role models in research and education and to lead advances in the mission of their department or organization.

Arastoopour Irgens’ project will take place over five years and focus on Title I schools in Anderson, South Carolina, which she said offer an ideal blend of rural and urban demographics. The first year of her participatory research plan will focus on building relationships with districts, meeting with teachers and assisting them in the classroom, and introducing the concepts and basic framework of the research.

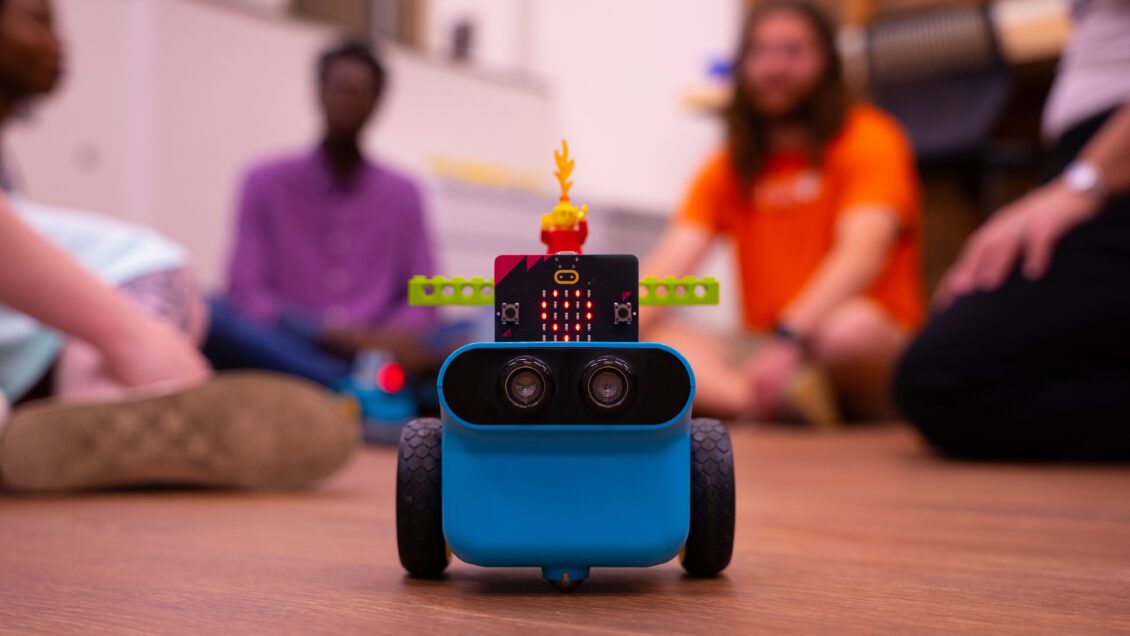

The design and implementation of the curriculum will include the “time travel” activity as well as lessons in which students learn to code TPBot Cars, small wheeled robots that students can direct to complete tasks using pre-built blocks of programming code. Students will be asked to apply their machine learning knowledge to create programs that serve a social good, such as a companion robot for people in social isolation or a grocery delivery robot for people with physical disabilities.

“We are focusing this project and the curriculum on schools with diverse student populations because we want to reach children who are highly underrepresented in the tech industry whose voices aren’t heard for the most part,” Arastoopour Irgens said. “We want their values, beliefs and cultures to be embedded in the technologies they might one day design or those technologies they will certainly use on a day-to-day basis.”

Jeff Marshall, interim dean of the College of Education, said that Arastoopour Irgens’ award stands out not only as a rare CAREER award in the field of education but also as one of the largest CAREER awards in NSF’s history. Marshall earned his own CAREER award in 2010 for work on creating sustainable, inquiry-based instruction.

“Golnaz’s work better equips students with knowledge they can apply to the rest of their lives, and her research stands out because it combines digital literacy and active teacher involvement to address issues around inclusion and equity,” Marshall said. “This recognition and award are well deserved and will aid her to further break ground that is truly new in education.”

For more on Arastoopour Irgens’ research, visit her Inclusive Digital Education and Analytics (IDEA) Lab. Joining Arastoopour Irgens in the IDEA Lab are Ibrahim Oluwajoba Adisa, Cinamon Bailey, Tolulope Famaye, Alison Fecher, Caitlin Lancaster, Katherine Mulholand, Deepika Sistla and Ian Thompson, who are all graduate student researchers at Clemson University. Hazel Vega serves as grant project manager for the lab.

This material is based upon work supported by the National Science Foundation under Award No. 2238712.
